- Estado

Trending Tags

- Municipios

- México y el Mundo

- Política

- Opinión

Trending Tags

- Más…

Trending Tags

- Estado

Trending Tags

- Municipios

- México y el Mundo

- Política

- Opinión

Trending Tags

- Más…

Trending Tags

MINUTO A MINUTO

NOTICIAS DEL ESTADO

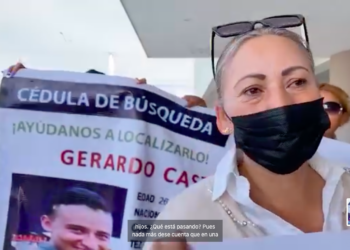

Madres buscadoras toman presidencia de Fresnillo; hay 30 desaparecidos en una semana

Debido a que en una semana se han generado más de 30 desapariciones de personas en Fresnillo, madres buscadoras se...

Leer más..SEGURIDAD

Madres buscadoras toman presidencia de Fresnillo; hay 30 desaparecidos en una semana

Debido a que en una semana se han generado más de 30 desapariciones de personas en Fresnillo, madres buscadoras se plantaron y se manifestaron en la presidencia municipal, en demanda...

Leer más..LA NOTA DEL DÍA

Israel capta como derriban drones de Irán en medio de ataque

Cientos de usuarios en redes sociales captaron el momento en que Irán atacó con drones y misiles a Israel. Momentos de terror y caos compartieron en plataformas como Facebook, X e Instagram para dar cuenta del...

Leer más..EDUCACIÓN

Maestros estatales demandan aumento salarial y toman las instalaciones de la SEC

Esta mañana docentes de preparatorias estatales tomaron la Secretaría de Educación del gobierno del estado, en demanda de certeza laboral, salarios dignos y otorgamiento de plazas a maestros con más antigüedad. José Antonio Villa ,docente...

Leer más..CULTURA Y SOCIEDAD

TINTA INDELEBLE

REDES SOCIALES, POLITICA Y MAS: La dimensión de la elección 2024

Por: Daniel Molina Solo para empezar se elegirá en esta elección 2024, el cargo de presidente, quinientos diputados, ciento veintiocho...

Código político: Claudia y la austeridad para las universidades

Por Juan Gómez La educación en general pero las universidades públicas en lo particular, han sido las instituciones educativas que...

UN GOBIERNO PARA TODAS Y TODOS

Esperanzador es el mensaje que Xóchitl Gálvez dio en el Primer Debate Presidencial. Fue claro y contundente su compromiso de...

MÉXICO Y EL MUNDO

Israel capta como derriban drones de Irán en medio de ataque

Cientos de usuarios en redes sociales captaron el momento en que Irán atacó con drones y misiles a Israel. Momentos de...

Arturo Zaldívar es investigado por orden de la ministra Piña; él responde que es “algo inédito”

El Consejo de la Judicatura Federal (CJF) investiga al ministro en retiro Arturo Zaldívar Lelo de Larrea y a excolaboradores cercanos por...

El Tiempo

Las populares de la semana

-

Madres buscadoras toman presidencia de Fresnillo; hay 30 desaparecidos en una semana

0 Interacciones -

Sheinbaum cae tras bailar banda en Mazatlán

0 Interacciones -

Ellos son los 14 candidatos que buscan la alcaldía de Guadalupe

0 Interacciones -

REDES SOCIALES, POLITICA Y MAS: La dimensión de la elección 2024

0 Interacciones -

La asociación Manuel Ortega “El pariente” se suma a la campaña de Alfredo Femat

0 Interacciones

Pórtico en Spotify

Copyright © 2021 Pórtico Mx

ORgullosamente un diseño y desarrollo de Omar Reyes