- State

- Municipalities

- Mexico and the World

- Politics

- Opinion

MINUTE BY MINUTE

STATE NEWS

Delicious culinary showcase by students of Universidad EDUCEM

Zacatecas (21-04-2024). The first thing Roberto cooked as a child was scrambled eggs with ham. Today he is joined by his classmates training as chefs at Universidad Educem, who presented their first Showcase...

POLITICS

SECURITY

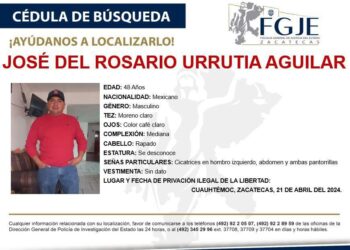

Teachers march in Zacatecas to demand that “Profe Chayito” be found; he was kidnapped

Chanting “Hang in there, Chayo” and “The teachers are rising up,” a group of citizens marched along the metropolitan boulevard to demand that teacher José del Rosario Urrutia Aguilar be located. Following the disappearance of the...


TINTA INDELEBLE

Código político: Fresnillo, the kidnapping capital

By Juan Gómez. With a population of approximately 240,000 and located in the north-central part of the country, Fresnillo,...

There is no democracy where there is fear

With 46 days to go before the largest election in Mexico's history, in the...

ENTRESEMANA / The president's poor former justice

By MOISÉS SÁNCHEZ LIMÓN. Or, put another way: “they want to throw me off the ship at any cost,” as...

MEXICO AND THE WORLD

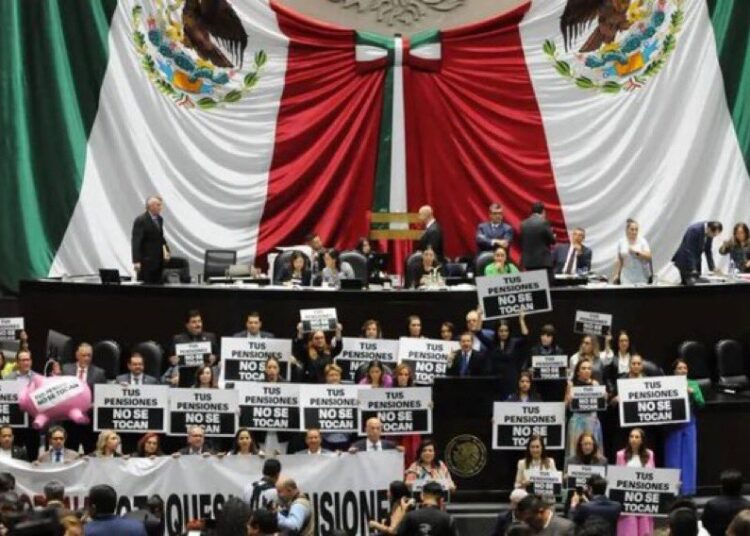

Reform creating the Pensiones para el Bienestar (Pensions for Well-being) system approved in general

On Monday, April 22, the full Chamber of Deputies approved the reform by which...

Checkpoint of hooded men that approached Sheinbaum “was staged,” says AMLO

President Andrés Manuel López Obrador asserted that the checkpoint that approached Claudia Sheinbaum in Chiapas was...


Most popular this week

- Now it is Ciudad Cuauhtémoc where people are protesting the kidnapping of a teacher

- PT and PES candidates for city council seats in Guadalupe join Pepe Saldivar's campaign

- Teachers march in Zacatecas to demand that “Profe Chayito” be found; he was kidnapped

- Código político: Fresnillo, the kidnapping capital

- Four kidnapped young people freed; one was an 18-year-old woman


Copyright © 2021 Pórtico Mx

Proudly designed and developed by Omar Reyes