- State

- Municipalities

- Mexico and the World

- Politics

- Opinion

- More…

MINUTE BY MINUTE

STATE NEWS

Brother of “El Mencho” arrested in Jalisco

Abraham Oseguera Cervantes, alias “Don Rodo”, brother of Nemesio Oseguera “El Mencho”, was arrested early this Sunday in...

POLITICS

SECURITY

Brother of “El Mencho” arrested in Jalisco

Abraham Oseguera Cervantes, alias “Don Rodo”, brother of Nemesio Oseguera “El Mencho”, was arrested early this Sunday in the town of Autlán de Navarro, Jalisco. The arrest was carried out...

STORY OF THE DAY

Once again! Fresnillo and Zacatecas rank among the cities with the highest perception of insecurity

Fresnillo and Zacatecas remain among the most unsafe cities in the country. Fresnillo is the city with the highest perception of insecurity in Mexico, while Zacatecas is third, according to a report issued this Thursday by the Instituto Nacional de Estadística...

EDUCATION

State teachers demand a pay raise and occupy the SEC offices

This morning, teachers from state high schools occupied the state government's Secretaría de Educación, demanding job security, decent wages, and the granting of permanent posts to teachers with the most seniority. José Antonio Villa, a teacher...

INDELIBLE INK

Código político: Fresnillo, kidnapping capital

By Juan Gómez. With a population of approximately 240,000 and located in the north-central part of the country, Fresnillo,...

There is no democracy where there is fear

With 46 days to go before the largest election in Mexico's history, in the...

ENTRESEMANA / The president's poor former minister

By MOISÉS SÁNCHEZ LIMÓN. Or, in other words: “they want to throw me off the ship at any cost”, according to...

MÉXICO Y EL MUNDO

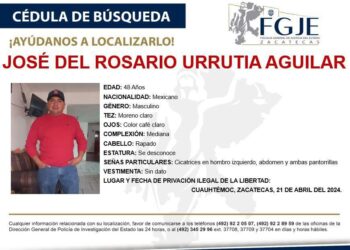

Maestros marchan en Zacatecas por la aparición del “Profe Chayito”; fue secuestrado

Con gritos de Chayo aguanta, los Maestros se levantan, un grupo de ciudadanos marcharon sobre el bulevar metropolitano para...

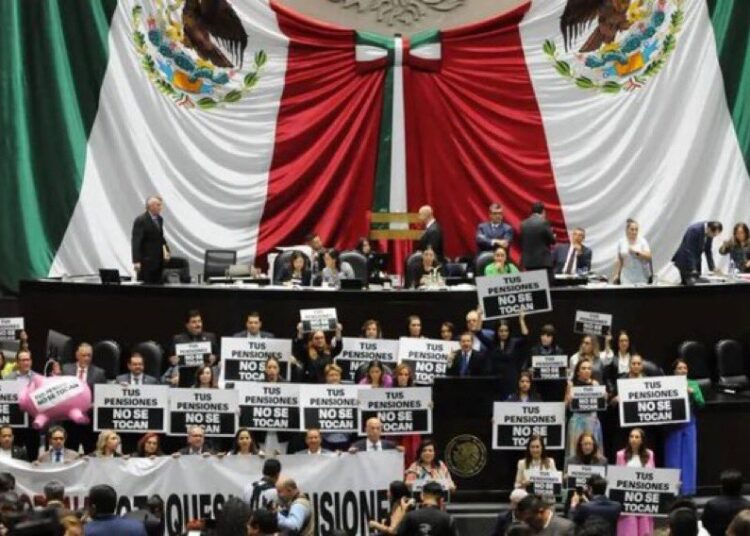

Se aprueba en lo general, la reforma por la que se crea el sistema de Pensiones para el Bienestar

Este lunes 22 de abril fue aprobado en el pleno de la Cámara de Diputados, la reforma por la que...

Most popular this week

- Código político: Fresnillo, kidnapping capital

- Protests over a teacher's kidnapping now reach Ciudad Cuauhtémoc

- Brother of “El Mencho” arrested in Jalisco

- Four kidnapped young people freed; one was an 18-year-old woman

- Teachers march in Zacatecas for the return of “Profe Chayito”, who was kidnapped

Pórtico on Spotify

Copyright © 2021 Pórtico Mx

Proudly designed and developed by Omar Reyes